Accelerate data center transformation with Cisco AI PODs

Modernize your AI infrastructure with proven deployment speed and simplicity.

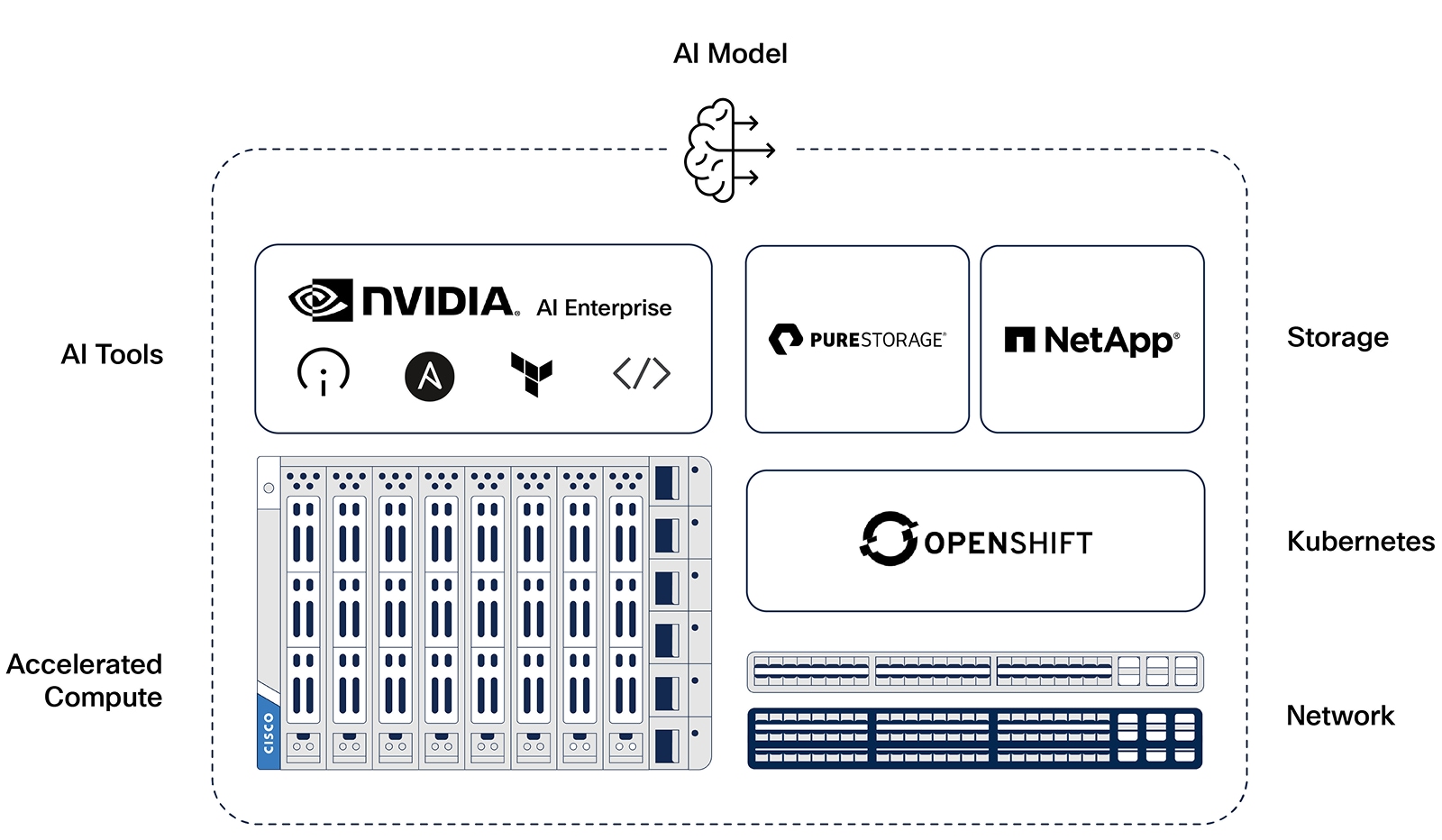

An integrated solution optimized for AI

Cisco AI PODs simplify and speed up deployment of the entire infrastructure stack for AI inferencing.

Drive innovation, accuracy, and insight

AI PODs are available in hardware configurations that tailor CPUs, GPUs, compute nodes, memory, and storage to a range of use cases.